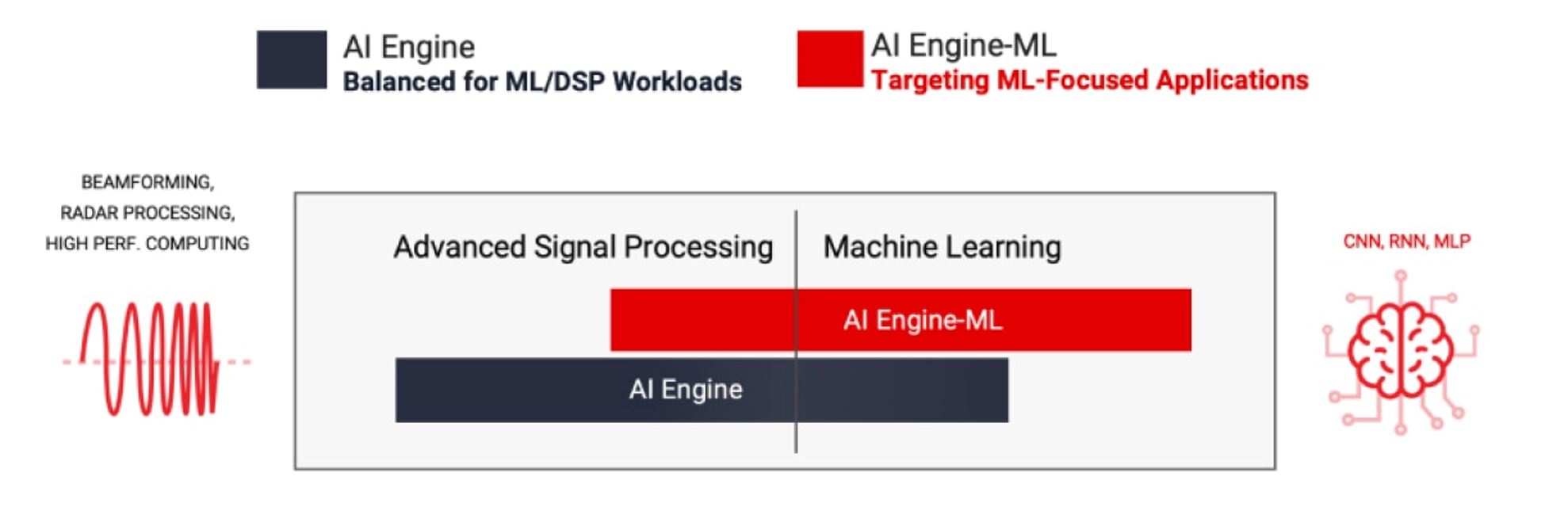

The AMD Versal architecture introduced a new adaptable compute domain, the AI Engine. The AI Engine is a powerful coprocessor that can implement vectorized algorithms that would otherwise be resource intensive on a traditional FPGA. While the number of AI Engine tiles varies across the Versal lineup, the AI Engine itself comes in two variants: the AIE and the AIE-ML.

Development boards for both AI Engines are currently available. They represent a powerful new tool for performing DSP, ML, and other custom algorithms applicable to edge computers, data centers, embedded applications, and more.

While AMD specifically recommends the AIE-ML for machine learning and the AIE for digital signal processing, this generalization does not fully capture the differences between these parts. Which one offers the best performance depends on a few key characteristics of your application.

This article looks at these two variants and provides some helpful tips on which is right for your use case.

What is Versal?

The AMD Versal adaptive SoC family represents the highest-end, highest-performance FPGA-equipped devices AMD sells. The Versal architecture is not just an FPGA. It’s a system on chip (SoC) that includes a fully software-programmable Arm processor, programmable logic, dedicated I/O capable of hundreds of gigabits per second over high-bandwidth standards, and a vector coprocessor array with a unique architecture whose acceleration is comparable only to that of a GPU. This array is the AI Engine.

Please Note: The term “Versal” is an adjective applied to a family of adaptive SoCs from AMD.

What is an AI Engine?

The AI Engine is neither a CPU nor a GPU but a vectorized processor array available on select Versal devices. AI Engines are best described as “math accelerators” that can be programmed to implement off-the-shelf or custom algorithms for specific applications. Common uses include FFTs, beamformers, FIR filters, channelizers, video preprocessing and analysis, matrix math operations, and more.

The AI Engine is utilized across various industries, including artificial intelligence, aerospace, signal intelligence, autonomous driving, wireless communications, medical imaging, and other applications requiring high-data-rate, time-critical processing.

Read more about Versal AI Engines here.

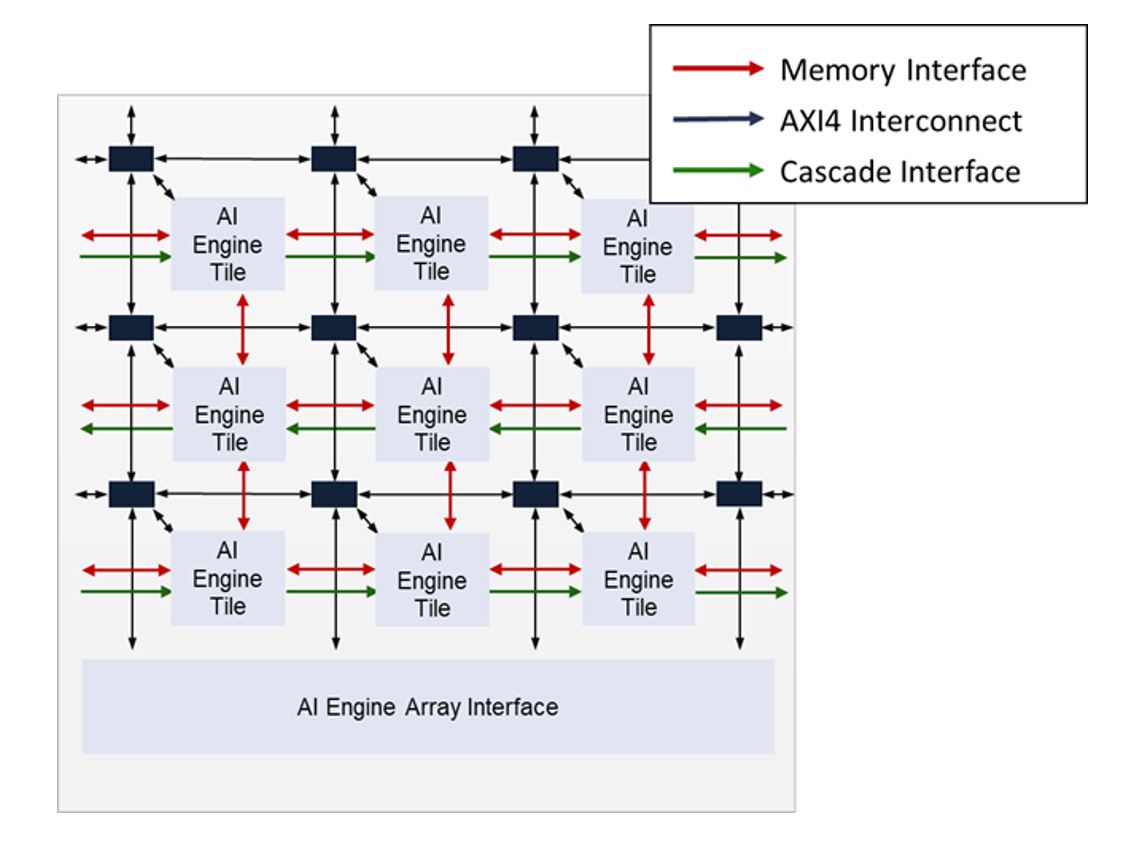

AI Engine Array

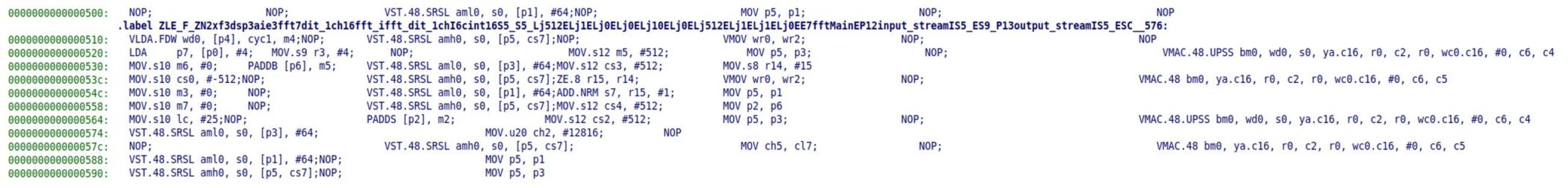

An AI Engine Array consists of multiple “tiles.” Each AI Engine tile is a specialized coprocessor known as a Very Long Instruction Word (VLIW), Single Instruction Multiple Data (SIMD) processor. These coprocessors are optimized for vectorized multiply-accumulate (MAC) operations, delivering low latency and high throughput.
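To make the idea of a vectorized MAC concrete, here is a minimal plain-Python sketch. It is not AI Engine code: the function names `vector_mac` and `fir`, and the `lanes` parameter, are hypothetical and only model how one instruction updates several accumulator lanes at once, using a sliding-window FIR filter as the workload.

```python
def vector_mac(acc, a, b):
    """One vector MAC step: acc[i] += a[i] * b[i] for every lane."""
    return [acc_i + a_i * b_i for acc_i, a_i, b_i in zip(acc, a, b)]


def fir(samples, taps, lanes=8):
    """Sliding-window FIR: y[n] = sum_k taps[k] * samples[n + k].

    Outputs are produced `lanes` at a time, with one vector MAC per tap.
    `lanes` is an illustrative value, not a hardware parameter.
    """
    n_out = len(samples) - len(taps) + 1
    out = []
    for start in range(0, n_out, lanes):
        width = min(lanes, n_out - start)
        acc = [0] * width
        for k, tap in enumerate(taps):
            window = samples[start + k : start + k + width]
            acc = vector_mac(acc, window, [tap] * width)
        out.extend(acc)
    return out


result = fir([1, 2, 3, 4, 5], [1, 1, 1], lanes=2)
```

On real hardware the inner list comprehension would correspond to a single wide instruction, which is where the throughput advantage comes from.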

Unlike a standard computer processor, which can perform a single operation on up to 64 bits of data per clock cycle, an AI Engine core can execute a multiply operation on up to 1024 bits of vectorized data per clock cycle. Additionally, the AI Engine can perform mathematical operations, memory loads, and memory stores simultaneously within the same clock cycle. These characteristics are unique to VLIW SIMD processors like the AI Engine. The combination of a wide data path, operational concurrency, and a maximum clock speed of 1.3 GHz enables a single AI Engine tile to process data at gigabytes per second (GB/s).
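The figures above can be turned into a rough back-of-the-envelope estimate. The sketch below assumes the 1024-bit vector width and 1.3 GHz clock quoted in this article and simply multiplies them; the result is an upper bound on operand data consumed by the multiplier, not a measured or sustained throughput. The helper names are hypothetical.

```python
VECTOR_BITS = 1024   # widest multiply operand quoted in this article
CLOCK_HZ = 1.3e9     # maximum AIE clock quoted in this article


def lanes(element_bits, vector_bits=VECTOR_BITS):
    """Number of elements of a given width that fit in one vector."""
    return vector_bits // element_bits


def peak_bytes_per_second(vector_bits=VECTOR_BITS, clock_hz=CLOCK_HZ):
    """Upper bound: one full-width vector consumed every clock cycle."""
    return vector_bits / 8 * clock_hz


for bits in (8, 16, 32):
    print(f"{bits:>2}-bit elements: {lanes(bits)} per vector")
print(f"peak operand rate: {peak_bytes_per_second() / 1e9:.1f} GB/s")
```

Real designs will land below this bound because of memory bandwidth, data movement, and pipeline stalls.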

AI Engine vs. CPUs and GPUs

Vector processors like the AI Engine function similarly to GPUs but offer greater flexibility for complex bulk data processing. However, they have less support for function calls and nested code than CPUs. As a result, an AI Engine cannot run an operating system or efficiently parse a JSON file, but it excels at executing complex algorithms that may not fully utilize a GPU.

Comparing the AIE and AIE-ML

The AIE and AIE-ML differ in several characteristics. However, the impact of these differences on design performance depends on the specific algorithm and implementation details.

The differences are summarized in the table below:

| Category | AIE Tile | AIE-ML Tile |
|---|---|---|
| Per Tile Data Memory | 32 KiB | 64 KiB + (between 125 KiB – 750 KiB shared) |
| Supported Data Types | int8, int16, int32, float | int4, int8, int16, int32 (partial) |
| Floating Point Support | 32-bit float | 16-bit bfloat, 32-bit float (partial) |
| Interconnections | Shared Memory Buffer. 2 streaming inputs, 2 streaming outputs | Shared Memory Buffer. 1 streaming input, 1 streaming output |
| Max Clock Speed | 1.3 GHz | 1 GHz |

The key differences between the two AIE variants are the amount of data memory available per AI Engine tile—AIE-ML has significantly more—and the supported data types, with AIE offering better support for 32-bit representations.

Data Memory

One of the key features of the AIE-ML tiles is the additional per-core memory they make available. In machine learning inference algorithms, it’s common to have a set of “weights”, a set of data values that are known during development and are mathematically applied to the incoming data at run time.

One approach to implementing such an algorithm in the AI Engine is to store these weights in the data memory of each tile. The more memory available on a single tile, the higher the precision and complexity of the algorithm it can run. The same is true of other algorithms, so the way to optimize the design is to maximize the memory available per tile. A single AIE tile has 32 KiB of memory dedicated to its core. However, an AIE core can also use the memory of three neighboring tiles. This means an AIE design could have a single AIE core that uses up to 128 KiB of data memory (its own 32 KiB plus 32 KiB from each of three neighbors), at the cost of leaving those neighboring tiles with no memory of their own, so they cannot perform any computation.

AI Engine-ML Architecture

The AIE-ML architecture helps alleviate this memory bottleneck by doubling the memory within each tile to 64 KiB. This means an AIE-ML design could have a single AIE-ML core that uses up to 256 KiB of data memory, with three other cores left without usable memory.
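The memory arithmetic above can be sketched as a quick sizing check. This is a simplified model built only on the per-tile figures in this article (32 KiB for AIE, 64 KiB for AIE-ML, up to three neighboring tiles borrowed). The function names are hypothetical, and the model ignores space needed for input/output buffers, stack, and other data, so treat a "fits" result as optimistic.

```python
KIB = 1024
TILE_MEMORY_KIB = {"AIE": 32, "AIE-ML": 64}  # per-tile figures from this article
NEIGHBOR_TILES = 3                           # neighbors whose memory a core can use


def max_core_memory_kib(variant, borrowed_tiles=NEIGHBOR_TILES):
    """Data memory one core can reach: its own tile plus borrowed neighbors."""
    return TILE_MEMORY_KIB[variant] * (1 + borrowed_tiles)


def weights_fit(variant, num_weights, bits_per_weight, borrowed_tiles=0):
    """True if the weight set alone fits in the memory reachable by one core."""
    needed_bytes = (num_weights * bits_per_weight + 7) // 8
    return needed_bytes <= max_core_memory_kib(variant, borrowed_tiles) * KIB


print(max_core_memory_kib("AIE"), max_core_memory_kib("AIE-ML"))
print(weights_fit("AIE", 20_000, 16), weights_fit("AIE-ML", 20_000, 16))
```

For example, 20,000 16-bit weights need 40,000 bytes, which exceeds a single AIE tile's 32 KiB but fits in a single AIE-ML tile's 64 KiB without borrowing from neighbors.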

AI Engine-ML Memory

The AIE-ML also has dedicated memory tiles. Instead of containing an AIE-ML core, each memory tile provides 512 KiB of memory and 6 streaming interfaces, which allow any tile in the array to access that memory. The number of memory tiles depends on the specific Versal part. Note that the Versal Premium parts do not have any memory tiles. For the Versal parts that do contain memory tiles, this represents between 6 MiB and 38 MiB available for data storage within the AI Engine. For a tile to access this shared memory, the data must pass over the streaming interconnect, so the shared memory has higher latency than the memory built into each tile.
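As a sanity check on these numbers, the total shared memory can be converted into an implied memory tile count. The sketch below is pure arithmetic on the figures quoted in this article (512 KiB per memory tile, 6 MiB to 38 MiB total). The function name is hypothetical, and the resulting counts are derived values, not datasheet entries for any particular part.

```python
MEM_TILE_KIB = 512  # memory per memory tile, as quoted in this article


def implied_memory_tiles(total_mib, tile_kib=MEM_TILE_KIB):
    """Number of memory tiles needed to provide `total_mib` of shared memory."""
    total_kib = total_mib * 1024
    if total_kib % tile_kib:
        raise ValueError("total is not a whole number of memory tiles")
    return total_kib // tile_kib


low, high = implied_memory_tiles(6), implied_memory_tiles(38)
print(f"6 MiB -> {low} memory tiles, 38 MiB -> {high} memory tiles")
```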

Streaming Interfaces

Another key difference in memory handling is the number of streaming interfaces per tile. In the AI Engine, data moves between neighboring tiles via shared memory buffers, a mechanism present in both AIE and AIE-ML. However, communication between non-neighboring tiles requires streaming interconnects.

The AIE variant supports two input and two output streaming interfaces per tile, while AIE-ML has only one input/output pair per tile. Streaming interfaces have lower throughput and higher latency than data buffering but free up tile memory that would otherwise be used for input and output buffers. Despite having fewer streaming interfaces, AIE-ML compensates with twice the dedicated memory per tile.

Data Types in AI Engines and AI Engine-ML

Another significant difference between the two AIE variants is the set of supported data types. AI Engines represent data as vectors of a specific data type. Both variants support complex data types, with vectors up to 1024 bits long.

The AIE has full support for 32-bit integer math and supports IEEE 754 single-precision floats. However, its floating-point implementation does not fully meet the specification, notably for subnormal numbers. In practice, this can cause small rounding differences between floating-point outputs from the AIE and those from the same algorithm running on a CPU with full IEEE 754 support.

The AIE-ML variant lacks full native support for 32-bit integer vector manipulation and IEEE floating point. Both 32-bit integer and IEEE floating-point math can still be done on the AIE-ML, but at lower performance than on the AIE. The AIE-ML does support two new data types: int4 for very low-precision quantized math, and bfloat16 for a more performant floating-point representation. The bfloat16 data type has less precision than an IEEE single-precision float, but its 16-bit size allows for higher-performance operations.
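To illustrate the precision trade-off, bfloat16 keeps the sign bit, the 8 exponent bits, and only the top 7 mantissa bits of a 32-bit float. The sketch below emulates the conversion on a CPU by truncating the low 16 bits. Truncation is an assumption made for simplicity (hardware may round instead), and `to_bfloat16` is a hypothetical helper, not an AI Engine API.

```python
import struct


def to_bfloat16(x):
    """Return x reduced to bfloat16 precision, as a Python float.

    Keeps the upper 16 bits of the float32 encoding (sign, 8 exponent bits,
    7 mantissa bits) and zeroes the rest. Truncation is a simplification.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


for value in (1.0, 1.001, 3.14159):
    print(f"{value} -> {to_bfloat16(value)}")
```

Because the exponent field is unchanged, bfloat16 covers the same range as a 32-bit float but resolves only about two to three decimal digits, which is why 1.001 collapses to 1.0 here.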

Overall, these differences mean that the AIE-ML is better suited to processing values in representations of 16 bits or fewer, while the AIE has better support for 32-bit representations.

Conclusion

The AMD Versal AI Engine accelerates vectorized algorithms using a fundamentally different approach from traditional CPU and GPU implementations. It delivers high performance, high reliability, and time-critical processing—tasks that would otherwise require ASICs or significant FPGA resources—while maintaining power efficiency and rapid reprogrammability. This enables the AI Engine to offload data operations from the CPU or PL, executing them faster and with lower power consumption, freeing up those resources for non-vectorizable tasks.

While AIE-ML is generally optimized for Machine Learning and AIE for Digital Signal Processing, these distinctions do not fully capture their architectural differences. Choosing the right variant requires careful consideration of your specific design requirements.

Additional Resources

To have BLT evaluate the best Versal part for your application, contact us here.

AIE-ML User Guide

BLT’s Swift Launch for Versal designs. Swift Launch is an AI Engine software framework that simplifies the process of developing and optimizing AI Engine designs. The Swift Launch framework helps you prototype AI Engine designs quickly, shortening development time.