The AMD Versal architecture introduced a new compute domain, the AI Engine. The Versal AI Engine is a powerful coprocessor for implementing vectorized algorithms that would otherwise be impractical on an FPGA. Its architecture, a systolic array of SIMD VLIW coprocessors, is unlike either a CPU or a GPU. As such, it brings its own design considerations that should be kept in mind when making use of this technology.

The AI Engine comes in two variants, the AIE and AIE-ML. This post focuses on the AIE. We’ll post about the AIE-ML soon.

The differences between the two variants affect both the performance of an algorithm and the specifics of how it is implemented. The only way to determine the exact impact of these differences on a specific application is to prototype a design for that application targeting a specific Versal device. This article covers some design considerations to keep in mind when designing for the AI Engine.

AI Engine Utilization

Choosing a Part

An important consideration when choosing a Versal part is the number of AI Engine tiles available on that part. In the Versal lineup, AI Engines are found mainly in the AI Edge and AI Core series of devices, and each of those devices contains either the AIE or the AIE-ML version of the AI Engine. The exceptions are the Versal Premium VP2502 and VP2802, which come equipped with a combination of both: 472 AIE-DSP tiles and 118 AIE-ML tiles. Within the AI Edge and AI Core series, the number of AI Engine tiles ranges from only 8 AIE-ML tiles on the Versal AI Edge VE2002 to 400 AIE tiles on the Versal AI Core VC1902. When selecting a Versal part, it is important to have an estimate of how many AI Engines you intend to use in your design.
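As a rough aid to that estimate, the sketch below picks the smallest listed part whose tile count covers a required number of tiles. It only includes the two tile counts quoted in this article. The `PARTS` table, the `pick_part` helper, and the `usable_fraction` derating factor are illustrative assumptions, not AMD data or tooling.

```python
import math

# Tile counts quoted in this article; extend from the AMD product tables as needed.
PARTS = {
    "VE2002": ("AIE-ML", 8),
    "VC1902": ("AIE", 400),
}


def pick_part(tiles_needed, variant, usable_fraction=1.0):
    """Return the smallest listed part of the given variant that fits, or None.

    usable_fraction is a hypothetical derating factor: the share of tiles you
    expect the design to actually use once data flow is taken into account.
    """
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    required_total = math.ceil(tiles_needed / usable_fraction)
    candidates = [
        (count, name)
        for name, (kind, count) in PARTS.items()
        if kind == variant and count >= required_total
    ]
    return min(candidates)[1] if candidates else None


# A design needing 100 active AIE tiles, assuming only half the array is usable.
choice = pick_part(100, "AIE", usable_fraction=0.5)
```

A result of `None` means no listed part is large enough under the chosen assumptions, which is a signal to revisit either the partitioning or the part family.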

Read more about choosing between AI Engines and AI Engine-MLs here.

How Many AI Engines Do You Need?

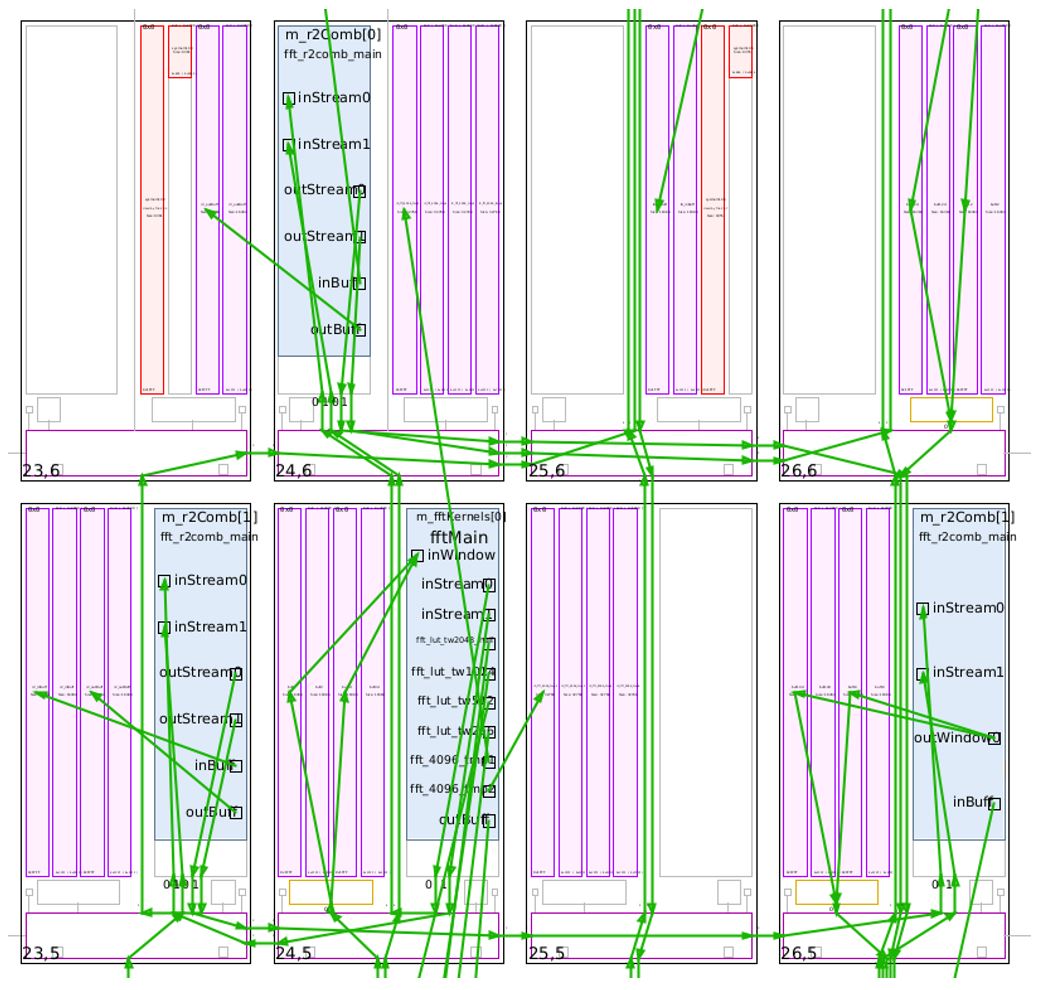

The number of AI Engines you need for a specific design can be difficult to estimate, since it is often not realistic for a given algorithm to utilize all of the AI Engine cores at the same time. This limitation occurs because it often only makes sense for AI Engine tiles to exchange data with their neighboring tiles. If the flow of data passes through multiple AI Engine tiles for a complex algorithm, each stage of data manipulation may include multiple AI Engines. This can easily lead to a situation where some AI Engine tiles cannot be used, because none of their neighboring tiles can exchange data with them.

For some algorithms, such as general matrix multiplication (GEMM), it is possible to pass data through the array so that all AI Engines are utilized. This is often not the case, however, for algorithms that require multiple stages of computation. While it is up to the developer to partition the design across separate AI Engines, the AMD-provided AIE Compiler includes a mapper that searches for a placement of the design onto the AI Engine array. Additionally, manual constraints are available for the programmer to control the mapping behavior. For designs that try to use all or most of the AI Engine cores, the mapper can take substantially longer to find a valid solution for routing data through the AI Engine array, and manual constraints may be required.
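The utilization effect described in this section can be illustrated with a toy model. The sketch assumes, purely for illustration, that each pipeline stage is placed in its own column (or columns) of the array so that it sits next to the previous stage, and that any tiles left over in those columns are stranded. The real mapper is far more flexible than this, and the function name and the 4x4 array size are hypothetical.

```python
import math


def column_utilization(stage_tiles, rows, cols):
    """Toy estimate of tile utilization for a column-per-stage placement.

    stage_tiles: number of active tiles each pipeline stage needs.
    Returns (active_tiles, reserved_tiles, utilization).
    """
    cols_used = sum(math.ceil(k / rows) for k in stage_tiles)
    if cols_used > cols:
        raise ValueError("design does not fit in this toy model")
    active = sum(stage_tiles)
    reserved = cols_used * rows
    return active, reserved, active / reserved


# Uneven multi-stage pipeline on a hypothetical 4x4 array: half the tiles idle.
uneven = column_utilization([4, 1, 2, 1], rows=4, cols=4)   # (8, 16, 0.5)

# GEMM-like design where every stage fills its column: full utilization.
uniform = column_utilization([4, 4, 4, 4], rows=4, cols=4)  # (16, 16, 1.0)
```

Even in this simplified picture, a pipeline whose stages need different numbers of tiles reserves noticeably more of the array than it actively uses, which is why the tile count of the target part should be estimated with some margin.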

Interconnects

The Versal AI Engine also supports a system of streaming interconnects that allows data to be sent between non-neighboring AI Engine tiles, making it possible to utilize tiles whose neighbors cannot pass them data. However, the streaming interface has lower data throughput than passing data between neighboring tiles, and it requires the developer to use the streaming interfaces explicitly in the design.

Visualization of the AI Engine Array for a 16K point FFT. The four blue rectangles represent tiles with an AI Engine core that is running code. The four remaining tiles' memory is being used, but the AI Engine core itself is not used. The green arrows represent the data flow from one AI Engine to the next.

AI Engine Data Control

Another important consideration when designing for the AI Engines is how they will be controlled. The AI Engines cannot read in data to process on their own. Instead, data must be passed to the AI Engine via the PLIO or GMIO interfaces.

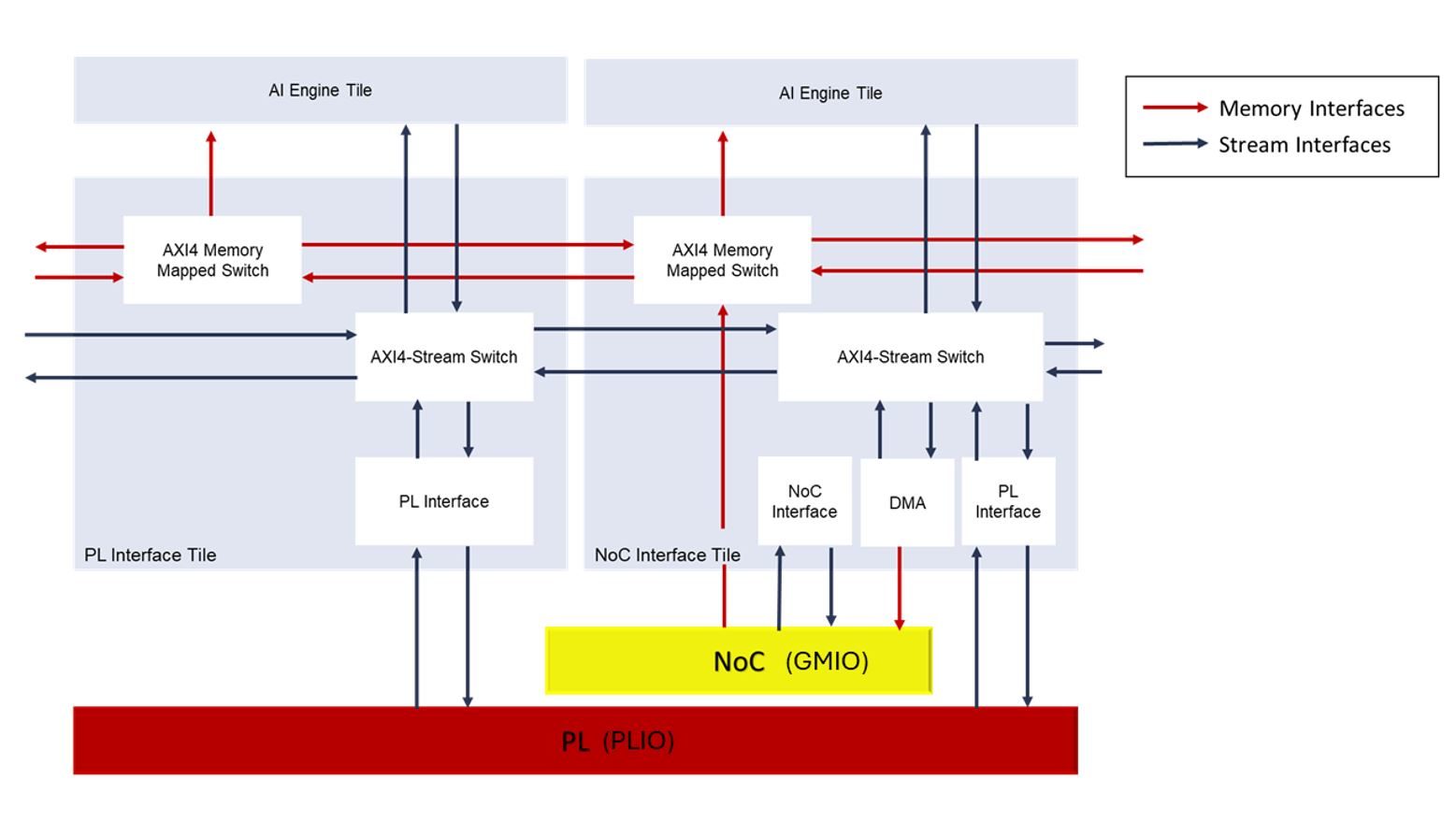

A depiction of part of the AI Engine array and its interfaces to the NoC and the PL. This is how data enters and leaves the AI Engine.

Depending on the Versal part, there will be several GMIO and PLIO interfaces available. A single GMIO or PLIO interface can be used to pass data to a single AI Engine tile. Multiple interfaces can be used concurrently to increase the throughput of data travelling to and from the AI Engines.
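A back-of-the-envelope way to size the number of interfaces is sketched below. It assumes ideal linear scaling, meaning each additional interface adds its full bandwidth, which a real design may not achieve. The function name and the bandwidth figures are hypothetical placeholders, not device specifications.

```python
import math


def interfaces_needed(required_mb_per_s, per_interface_mb_per_s):
    """Minimum number of concurrent interfaces, assuming ideal linear scaling."""
    if required_mb_per_s < 0 or per_interface_mb_per_s <= 0:
        raise ValueError("rates must be positive")
    return math.ceil(required_mb_per_s / per_interface_mb_per_s)


# Hypothetical numbers: a 10,000 MB/s data stream over 4,000 MB/s interfaces.
count = interfaces_needed(10_000, 4_000)  # 3
```

The per-interface rate for a given part and clock configuration should come from the device documentation or from a measured prototype rather than from the placeholder values used here.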

PLIO

Programmable Logic IO (PLIO) is a set of direct interconnects between the FPGA fabric and the AI Engine tiles. PLIO lets logic in the PL exchange data with the AI Engine over AXI4-Stream interfaces, and it is the lowest-latency way to move data in and out of the array.

GMIO

Global Memory IO (GMIO) is another method of moving data to and from the AI Engine. GMIO allows the Arm processor on the Versal to command the NoC to act as a DMA, moving data from the Versal unified memory into the AI Engines for processing. This method has higher latency than PLIO, but it allows the AI Engine to be controlled directly by the processor without devoting PL resources to AI Engine operations.

Using GMIO may limit the throughput of data passing through the AI Engine, because all data that is passed into or out of the AI Engine must first be copied by the processor into non-cached memory. This means the data rate through the AI Engine may be bottlenecked by how fast the Versal’s Arm Cortex-A72 processor can copy data. For a complex algorithm where the data rate is lower but the AI Engine is still well utilized, this may not be a problem. Higher throughput may be achieved by using PLIO.
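The bottleneck argument above can be sketched as a simple model in which the sustained rate of a data path is set by its slowest stage. The stage names and rates below are hypothetical placeholders chosen for illustration, not measurements of any Versal device.

```python
def sustained_throughput(stage_rates):
    """Return (bottleneck_stage, rate) for a chain of stages.

    stage_rates maps a stage name to the rate it can sustain (same units
    for every stage). The slowest stage limits the whole chain.
    """
    if not stage_rates:
        raise ValueError("at least one stage is required")
    name = min(stage_rates, key=stage_rates.get)
    return name, stage_rates[name]


# Hypothetical GMIO data path where the processor copy is the slowest step.
copy_bound = sustained_throughput(
    {"cpu_copy": 2000, "noc_dma": 8000, "aie_kernel": 5000}
)

# Hypothetical compute-heavy kernel: the copy rate no longer matters.
compute_bound = sustained_throughput(
    {"cpu_copy": 2000, "noc_dma": 8000, "aie_kernel": 500}
)
```

In the second case the AI Engine kernel itself is the limit, which matches the observation that GMIO can be adequate for complex algorithms with lower data rates.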

Custom PL Logic

For applications where the processor should control the AI Engines, but better performance is needed than GMIO can deliver, it may be possible to use custom PL logic that coordinates with the processor and uses PLIO to feed data to the AI Engines. Such a setup would allow the AI Engine to be controlled by the processor while overcoming the limitations of GMIO. However, this approach requires application-specific development and consumes PL resources.

Conclusion

The AMD Versal architecture’s AI Engine offers a way of accelerating vectorized algorithms with a completely different approach from conventional CPU and GPU implementations. As such, the AI Engines present some unique design challenges, and fully utilizing the power of the vector processor in a final design takes knowledge and skill. In this article, we have discussed just two of those aspects: AI Engine utilization and AI Engine data control.

Additional Resources

To have BLT evaluate the best Versal part for your application, contact us here.

BLT offers Swift Launch for Versal designs. Swift Launch is an AI Engine software framework that simplifies the process of developing and optimizing AI Engine designs. It can help you prototype AI Engine designs quickly, shortening development time.